There are a lot of articles out there explaining how to set up a Kubernetes cluster at home, but I wanted to write my own take and explain how to set up a Kubernetes cluster in the simplest way possible, for people who have just basic knowledge of Kubernetes and want to start using a cluster.

So, in this article I will use the incredible `k3s`, also called `lightweight Kubernetes`, as for me it is the simplest and most interesting way of setting up Kubernetes on a Raspberry Pi. It is a fully certified Kubernetes distribution from Rancher Labs that is production-ready and designed especially for resource-constrained environments, for example IoT or edge computing appliances found in trains, satellites, boats, etc. It is optimized for CPUs with ARM architecture (ARM64 and ARMv7), which makes it a great fit for Raspberry Pis.

K3s packs the Kubernetes components into a single binary so everything runs under one OS process; this is one of the reasons it is so lightweight. It packs not only the vanilla Kubernetes components but also the other technologies needed for a functional cluster, like a CRI, a CNI, and an ingress controller. The package includes `containerd`, `flannel`, `SQLite` for the datastore, `Traefik` as the ingress controller, `CoreDNS` for DNS, and even `Helm-Controller`, which allows a manifest-based way of deploying Helm charts. These technologies can be disabled or swapped out if you so wish. It also has a load balancer service that connects the Kubernetes cluster to the host’s IP.

Apart from that, K3s also allows you to:

- Manage the TLS certificates of the Kubernetes components

- Manage the connection between worker and server nodes

- Auto-deploy Kubernetes resources from local manifests in real time as they are changed

- Manage an embedded etcd cluster if you wish to use etcd

The tools needed:

- Raspberry Pi 5, 8GB RAM

- microSD SanDisk Extreme Pro 667x 32GB 100MB/s UHS-I class U3

- Raspberry Pi 4 Model B, 8GB RAM

- justPi microSD 32GB 100MB/s class 10

The steps:

- Image Ubuntu to both microSD cards:

  - Choose Ubuntu Server

  - Configure hostname, Wi-Fi, SSH with key

- Choose the master node and set it up over SSH:

  - Update the package list

  - Install Docker

  - Set up cgroups using the kernel flags

  - Install K3s

  - Check that the `k3s` service is running; if not, restart it

  - Check the cluster (as a single-node cluster)

- Choose the worker node:

  - Update the package list

  - Install Docker

  - Test if the worker node can reach the master node

  - Install K3s as a worker node

  - Check that the `k3s-agent` service is running; if not, restart it

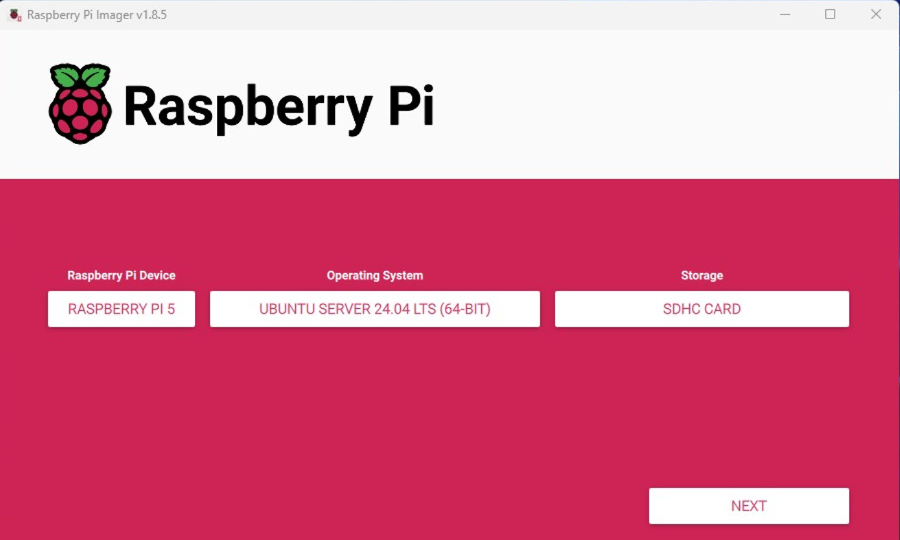

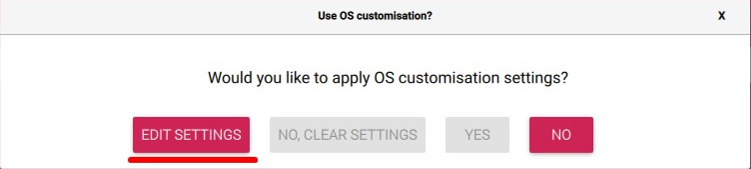

Image Ubuntu

In my case I used Raspberry Pi Imager to write Ubuntu to my SD cards. I chose the bare-metal version of Ubuntu. When you choose this version in Raspberry Pi Imager, you can make some very handy customizations that save time. You can:

- Choose the hostname

- Configure wireless LAN

- Set the region and keyboard layout

- Set up SSH with your key pair

It is important to choose adequate and unique hostnames; in my case I chose k3s-node-1 and k3s-node-2.

Set up static IP

After imaging Ubuntu to both SD cards, we want to make sure the IPs of both the master and worker nodes are static. For that we can use our router’s UI and check the current list of active devices on our network. We can then insert the SD cards into both Raspberry Pis and turn them on. When they join our local network, we will see them in the list and can make their IPs static.

Test SSH communication

Now let’s see if we can reach those Raspberry Pis and SSH into them:

# check that we can reach both nodes
> ping 192.168.50.51
> ping 192.168.50.217
# open an SSH session
> ssh 192.168.50.217
## if okay, then exit
> ssh 192.168.50.51
## if okay, then exit

To make things easier, I use the SSH client config file, like so:

Host k8s-node-1
    Hostname 192.168.50.51
    IdentityFile id_ed25519
    ServerAliveInterval 60
    ServerAliveCountMax 120

Host k8s-node-2
    Hostname 192.168.50.217
    IdentityFile id_ed25519
    ServerAliveInterval 60
    ServerAliveCountMax 120

Set up master node

Now it is time to set up our master node. This is what we need to do:

- Install Docker (set kernel flags for the container runtime if needed)

- Install k3s – master mode

Install Docker

# update the list of available packages on the machine
> sudo apt update
# upgrade all the packages
> sudo apt upgrade
# pull and install docker
> sudo apt install -y docker.io
> sudo docker info
# Do you see something like this:
## WARNING: No memory limit support
## WARNING: No swap limit support
## WARNING: No kernel memory limit support
## WARNING: No kernel memory TCP limit support
## WARNING: No oom kill disable support
# if yes, then we need to set kernel flags for docker
> sudo sed -i \
  '$ s/$/ cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1 swapaccount=1/' \
  /boot/firmware/cmdline.txt
# finally we have to reboot
> sudo reboot

Install k3s – master mode
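One caveat before running the installer: re-running the `sed` command from the Docker step appends the flags again each time. The following is a minimal sketch of an idempotent variant, assuming GNU `sed` and `grep` (as shipped with Ubuntu) and a single-line `cmdline.txt`. The function name `ensure_cgroup_flags` is my own and not part of any tool.

```shell
# Append each kernel flag to the end of the cmdline file only if it is missing.
# Hypothetical helper; call it as root with the path to cmdline.txt.
ensure_cgroup_flags() {
  local file="$1" flag
  for flag in cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1 swapaccount=1; do
    # -F: literal match, -w: whole word, so cgroup_memory=10 would not count
    if ! grep -qwF -- "$flag" "$file"; then
      # '$' addresses the last line; 's/$/ flag/' appends to its end
      sed -i "\$ s/\$/ $flag/" "$file"
    fi
  done
}
```

Running `ensure_cgroup_flags /boot/firmware/cmdline.txt` twice leaves the file unchanged the second time; a reboot is still needed for the flags to take effect.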

# install k3s
# download the script and pipe it to the shell
# k3s will be installed with the default configuration
> curl -sfL https://get.k3s.io | sh -

After issuing the above command, k3s will be installed using the default configuration and the script will output the installation steps:

#...
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s.service
[INFO] systemd: Enabling k3s unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.
[INFO] systemd: Starting k3s

As we can see, everything is packaged in a single systemd service. After installation we can check the status:

# check the status of the systemd service responsible for k3s
> systemctl status k3s

Then we can check whether we already have the cluster (a single-node cluster in this case). kubectl is installed by default, so we can use it right away.

> sudo k3s kubectl get nodes

Finally, we need to retrieve a token, which will be used to connect our worker node to our master node:

# get the cluster token to be used to configure the worker node
> sudo cat /var/lib/rancher/k3s/server/node-token

Analyzing K3s objects

Let’s check all the Kubernetes objects that k3s installs and sets up behind the scenes to make a fully functional cluster.

> sudo kubectl get all -n kube-system

NAME                                         READY   STATUS
pod/local-path-provisioner-...               1/1     Running
pod/coredns-...                              1/1     Running
pod/helm-install-traefik-...                 0/1
pod/helm-install-traefik-...                 0/1
pod/metrics-server-...                       1/1     Running
pod/svclb-traefik-...                        2/2     Running
pod/traefik-...                              1/1     Running

NAME                                         TYPE           CLUSTER-IP
service/kube-dns                             ClusterIP      10.43.0.10
service/metrics-server                       ClusterIP      10.43.69.115
service/traefik                              LoadBalancer   10.43.149.125

NAME                                         DESIRED   CURRENT   READY   UP-TO-DATE
daemonset.apps/svclb-traefik-...             1         1         1

NAME                                         READY   UP-TO-DATE
deployment.apps/local-path-provisioner       1/1     1
deployment.apps/coredns                      1/1     1
deployment.apps/metrics-server               1/1     1
deployment.apps/traefik                      1/1     1

NAME                                         DESIRED
replicaset.apps/local-path-provisioner-...   1
replicaset.apps/coredns-...                  1
replicaset.apps/metrics-server-...           1
replicaset.apps/traefik-...                  1

NAME                                         COMPLETIONS   DURATION
job.batch/helm-install-traefik-crd           1/1           28s
job.batch/helm-install-traefik               1/1           31s
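When scanning this kind of listing, it can help to filter for pods that are not healthy yet. Here is a small sketch, assuming the standard `kubectl get pods` column order (NAME, READY, STATUS, ...); the function name `not_ready_pods` is hypothetical.

```shell
# Read `kubectl get pods` output on stdin and print the names of pods whose
# STATUS column is neither Running nor Completed (the header row is skipped).
not_ready_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Example usage on the master node:
#   sudo k3s kubectl get pods -n kube-system | not_ready_pods
```

An empty result means every pod in the namespace is either running or has finished, which is what the helm-install jobs are expected to do.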

Set up worker node

Now, setting up the worker node is really easy; we just need to run the same install command but pass some parameters:

# update the list of available packages on the machine
> sudo apt update
# upgrade all the packages
> sudo apt upgrade
> curl -sfL https://get.k3s.io | K3S_URL=https://[MASTER_NODE_IP]:6443 K3S_TOKEN=[CLUSTER_TOKEN] sh -

You should see output like this:

[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service
[INFO] systemd: Enabling k3s-agent unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO] systemd: Starting k3s-agent

Let’s also confirm that the agent is running:

> sudo systemctl status k3s-agent

Manage the cluster from your laptop

Instead of SSHing into the master node and managing the cluster from there, let’s make it easier and install kubectl on our laptop and point it to the master node.

In my case I am using Windows, and I will use the Chocolatey package manager to install kubectl from PowerShell Core:

# install chocolatey package manager

> Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))

# check that is working and each version we have

> choco -v

> choco install kubernetes-cli

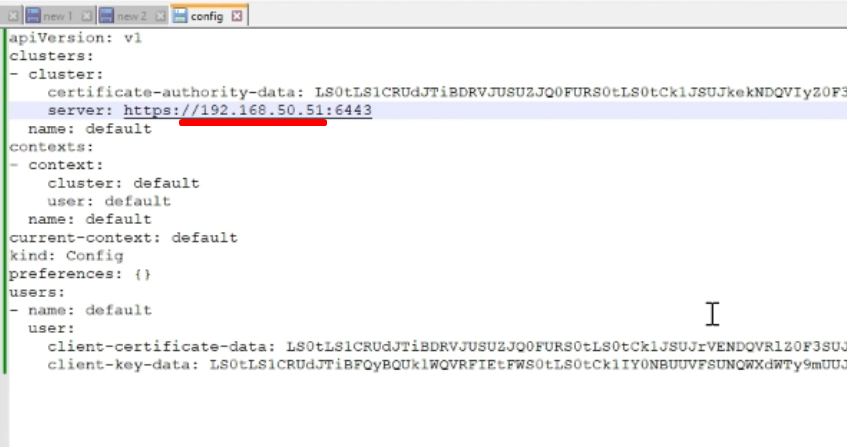

> kubectl versionNow, let’s copy the kubeconfig file generated by K3s, this is the easiest way. SSH into the master node, run the following command and copy the output .

> sudo cat /etc/rancher/k3s/k3s.yamlIn our laptop :

> cd C:\Users\[your-user-name]\.kube\

> notepad++.exe .\configPaste the contents. Correct the server url. You should have something like this :

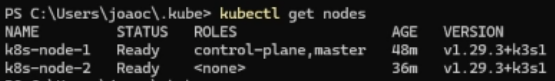
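As an alternative to fixing the URL by hand, the address can be rewritten on the master node before copying the output. This is only a sketch: it assumes the generated file contains the default `https://127.0.0.1:6443` server entry, and `kubeconfig_for` is a name I made up for illustration.

```shell
# Print a kubeconfig with the loopback server address replaced by the given IP.
# $1: IP of the master node, $2: kubeconfig path (defaults to the K3s location).
kubeconfig_for() {
  local master_ip="$1" file="${2:-/etc/rancher/k3s/k3s.yaml}"
  sed "s#https://127\.0\.0\.1:6443#https://${master_ip}:6443#" "$file"
}

# Example usage on the master node (the file is root-owned, hence sudo):
#   sudo sh -c "$(declare -f kubeconfig_for); kubeconfig_for 192.168.50.51"
```

The function only prints to stdout and never modifies the original file, so the copy K3s uses locally keeps working.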

Finally we can use kubectl from our laptop and check out our cluster 🙂

> kubectl get nodes

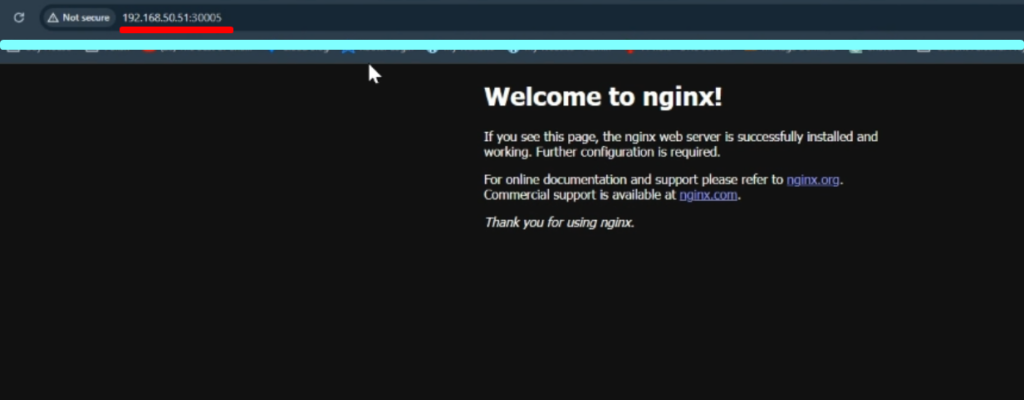

Deploying NGINX in K3s

To test our cluster, let’s just deploy nginx with 2 replicas.

For that I define a deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: backend
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 2
  selector:
    matchLabels:
      type: backend

Now, let’s deploy:

> kubectl apply -f .\deployment-nginx.yaml
# check that it is there
> kubectl get all

Finally, let’s create a NodePort service to be able to access nginx (we will access the welcome page). Here is the definition:

apiVersion: v1
kind: Service
metadata:
  name: myapp-service
  labels:
    name: myapp-service
    type: backend
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30005
  # a Service selector is a plain label map; matchLabels is only for Deployments
  selector:
    type: backend

> kubectl apply -f .\service-nginx.yaml
# check that it is there
> kubectl get all
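To confirm that the service actually answers, one option is to request the NodePort from any machine on the network that has a POSIX shell and `curl`, for example one of the nodes. This sketch assumes the default nginx image, whose welcome page contains the text "Welcome to nginx!"; `is_nginx_welcome` is a hypothetical helper name.

```shell
# Succeed if the HTML read from stdin looks like the default nginx welcome page.
is_nginx_welcome() {
  grep -q "Welcome to nginx!"
}

# Example usage, with the master node IP and the nodePort from the service above:
#   curl -s http://192.168.50.51:30005 | is_nginx_welcome && echo "nginx is reachable"
```

Because a NodePort is opened on every node, the same check should also work against the worker node’s IP.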
